- Indico style

- Indico style - inline minutes

- Indico style - numbered

- Indico style - numbered + minutes

- Indico Weeks View

11th Polish Symposium on Physics in Economy and Social Sciences

→

Europe/Warsaw

ONLINE

ONLINE

Description

Post-conference publications

Every registered participant of Symposiom may wish to submit full paper for consideration of its publication in topical issues of Entropy:

The papers will be refereed by the standard method. In case of acceptance the 20% discount of article processing charge will be applied for registered participant of the conference.

Best poster award

An award (300 CHF) sponsored by Entropy journal for the best poster

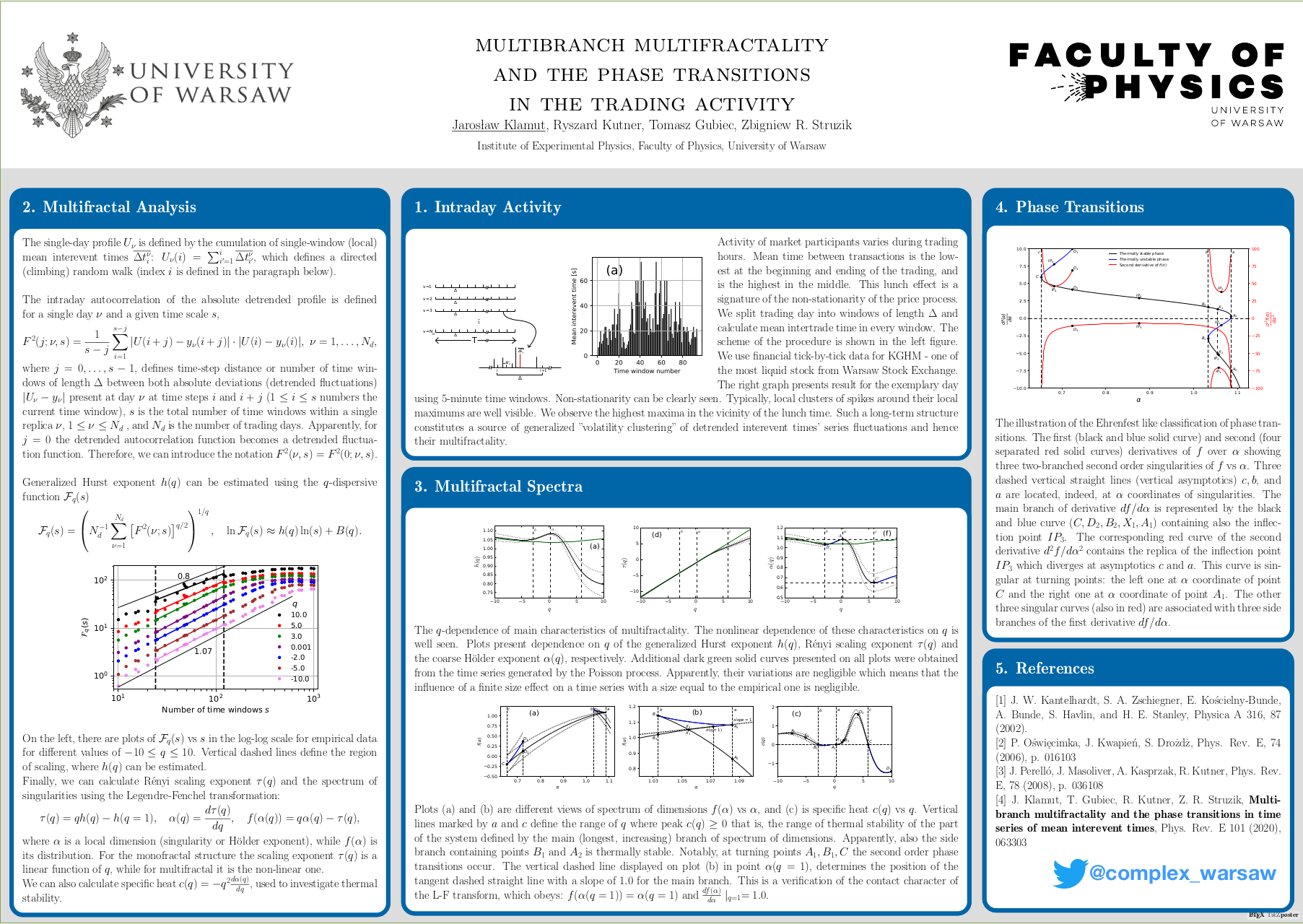

went to Mr. Jarosław Klamut for presentation of poster

entitled Multibranch multifractality and the phase transitions in the trading activity by J. Klamut, R. Kutner, T. Gubiec, and Z. R. Struzik.

General info

The Polish Symposium on Physics in Economy and Social Sciences (FENS - Fizyka w Ekonomii i Naukach Społecznych) has a traditon of gathering physicists, economist and social scientists interested in application of physical methods in economy and social sciences. The Symposium is lead by

- Section of Physics in Economy and Social Sciences, Polish Physical Society

in cooperation with:

- Faculty of Physics and Applied Computer Science, AGH University of Science and Technology;

- Faculty of Physics, Astronomy and Applied Computer Science, Jagiellonian University;

- Institute of Nuclear Physics, Polish Academy of Sciences;

- Faculty of Physics, University of Warsaw.

The Symposium focuses on:

- Structure and evolution of complex networks in socio-economic context.

- Role of complex adaptive systems in socio-economic phenomena and processes.

- Dynamic of conflicts and social polarisation.

- Behavioural modelling and irrational choice, the role of random chance and information in economic and social phenomena.

- Modelling of opinion evolution and the spread of innovation.

- Complexity and emergence in socio-economic systems.

- Computational methods in economy and social sciences.

- Financial time series: equilibrium and non-equilibrium properties, linear and non-linear, fractal and multifractal, memory effects, correlations and dependencies, non-stationarity.

- Random matrix theory.

- Game theory.

- Algorithmic value investing on stock market.

- Thermodynamic formalism in economy.

- Role of non-extensiveness on financial markets.

- Role of extreme and superextreme events on financial markets.

- Risk management and propagation of risk vs. share of wallet; financial engineering.

- Models of market dynamics, especially agent-based models in micro- and macroscale.

Invited speakers

- Stanisław Drożdż (Institute of Nuclear Physics Polish Academy of Sciences and Cracow University of Technology, Poland)

- Serge Galam (Centre for Political Research, Sciences Po, and CNRS, France)

- Shlomo Havlin (Department of Physics, Bar-Ilan University, Ramat-Gan, Israel)

- Janusz Hołyst (Faculty of Physics, Warsaw University of Technology, Poland)

- Adam Kleczkowski (University of Strathclyde, Scotland)

- Krzysztof Kułakowski (Faculty of Physics and Applied Computer Science, AGH University of Science and Technology, Poland)

- Fabrizio Lillo (Dipartimento di Matematica, Università di Bologna, Italy)

- Thomas Lux (Department of Economics, University of Kiel, Germany)

- Rosario N. Mantegna (Palermo University, Italy)

- Tiziana Di Matteo (King’s College London, UK)

- Ryszard Kutner (Faculty of Physics, University of Warsaw, Poland)

- Frank Schweitzer (ETH Zürich, Switzerland)

- Didier Sornette (ETH Zürich, Switzerland)

- Katarzyna Sznajd-Weron (Wrocław Technical University, Poland)

- Boleslaw Szymanski (Rensselaer Polytechnic Institute, Troy, NY, USA)

Organizing committee

- Bartłomiej Dybiec (Jagiellonian University)

- Przemysław Gawroński (AGH University of Science and Technology)

- Małgorzata Krawczyk (AGH University of Science and Technology)

- Jarosław Kwapień (The Henryk Niewodniczański Institute of Nuclear Physics, Polish Academy of Sciences)

- Krzysztof Malarz (AGH University of Science and Technology)

- Paweł Oświęcimka (The Henryk Niewodniczański Institute of Nuclear Physics, Polish Academy of Sciences)

Scientific committee

- Marcel Ausloos (University of Leicester)

- Dariusz Grech (University of Wrocław)

- Shlomo Havlin (Bar-Ilan University)

- Janusz Hołyst (Warsaw University of Technology)

- Ryszard Kutner (University of Warsaw)

- Rosario N. Mantegna (Central European University & Palermo University)

- Tiziana Di Matteo (King’s College London)

- Maciej Nowak (Jagiellonian University)

- Arkadiusz Orłowski (Warsaw University of Life Sciences & Polish Academy of Sciences)

- Enrico Scalas (University of Sussex)

- Christopher Schinckus (University of Leicester)

- Didier Sornette (ETH Zurich)

- Zbigniew Struzik (Tokyo University)

- Katarzyna Sznajd-Weron (Wrocław Technical University)

- Magdalena Wojcieszak (University of California)

Fees

There is no Conference fee

Deadlines

Registration (closed): June 15, (extended) June 22, 2021 (number of participants is limited)

Abstract acceptance (closed): May 31, 2021

Abstract submission (closed): April 30, 2021

Satellite events

- on Wednesday, June, the 30th, SFINKS@FENS11 Warm-Up will take place. The warm-up is a one-day school (for students and early-stage researchers) which offers several tutorials on various aspects of scientific work and a quiz with prizes;

- on Saturday, July, the 3rd, at 2 PM, during the closing ceremony, the results of biennale competition for the best doctoral, master's and bachelor's theses organized by the Polish Physical Society Section Physics in Economy and Social Sciences will be annouced;

- on Saturday, July, the 3rd, at 3 PM the Open Meeting of the Polish Physical Society Section Physics in Economy and Social Sciences will take place.

Previous FENS Conferences

- FENS 2004 (Warszawa) — Acta Physica Polonica B 36(8), (2005)

- FENS 2006 (Kraków) — Acta Physica Polonica B 37(11), (2006)

- FENS 2007 (Wrocław) — Acta Physica Polonica A 114(3), (2008)

- FENS 2009 (Rzeszów) — Acta Physica Polonica A 117(4), (2010)

- FENS 2010 (Warszawa) — Acta Physica Polonica A 121(2-B), (2012)

- FENS 2012 (Gdańsk) — Acta Physica Polonica A 123(3), (2013)

- FENS 2014 (Lublin) — Acta Physica Polonica A 127(3-A), (2015)

- FENS 2015 (Rzeszów) — Acta Physica Polonica A 129(5), (2016)

- FENS 2017 (Warszawa)

- FENS 2019 (Otwock-Świerk) — Acta Physica Polonica A 138(1), (2020)

- FENS 2021 (

KrakówOn-line)- Entropy: Three risky decades: A time for econophysics?

- Entropy: Entropy and social physics

Organizers

Participants

Adam Kleczkowski

Adil Nawaz

Aleksejus Kononovicius

Alessio Catanzaro

Allan Vieira

Andres Garcia Medina

Andrey Leonidov

Angelika Abramiuk-Szurlej

Anna Carbone

Annalisa Caligiuri

Anton Heckens

Arkadiusz Jędrzejewski

Arkadiusz Lipiecki

Arkadiusz Orłowski

Bartlomiej Dybiec

Bartłomiej Nowak

Benedetto Di Ruzza

Benito Rodriguez Camejo

Boleslaw Szymanski

Christos Charalambous

Czesław Mesjasz

Didier Sornette

Dorota Żuchowska-Skiba

Dragos Bozdog

Everton Abreu

Fabrizio Lillo

Francesca Giuffrida

Frank Schweitzer

Geza Tóth

Giuseppe Brandi

Grzegorz Siudem

Henrique Boneto

Hiroyasu Inoue

Ida Knapik

Jakub Pawłowski

Jana Meles

Janusz Hołyst

Janusz Miśkiewicz

Jarosław Klamut

Jarosław Kwapień

Jhordan Silveira de Borba

Jianxi Gao

Joanna Landmesser

Joanna Linczuk

Jose Roberto Iglesias

Juergen Mimkes

Julian Neñer

Karol Capała

Katarzyna Sznajd-Weron

Krzysztof Kułakowski

Krzysztof Malarz

Krzysztof Suchecki

Krzysztof Twardoch

Linda Ponta

Maciej Doniec

Maciej Pawlik

Maciej Wołoszyn

Marcel Półkośnik

Marcelo Ribeiro

Marcin Wątorek

Marek Karwanski

Marek Stanuszek

Maria Stojkow

Maria Tsouchnika

Martin Crane

María Fabiana Laguna

Mateusz Gala

Mateusz Ozimek

Mateusz Wilinski

Małgorzata Krawczyk

Michał Bernardelli

Michał Chorowski

Mikhail Kanevski

Mikołaj Szurlej

Patryk Bojarski

Pawel Sobkowicz

Paweł Oświęcimka

Pietro Murialdo

Piotr Górski

Przemysław Gawroński

Przemysław Nowak

Rafal Olszowski

Raul Garcés

Robert Jankowski

Robert Paluch

Rosario Mantegna

Ryszard Kutner

Sabin Hashmi

Savitri Maharaj

Sebastian Gonçalves

Serge Galam

Shanshan Wang

shash son

Shlomo Havlin

Stanisław Drożdż

Sudhir Jain

Tai Mai

Teo Silva

Thiago Dias

Thomas Lux

Tiziana Di Matteo

Tomasz Gubiec

Tomasz Raducha

Urszula Grzybowska

Wojciech Radosz

Yuriy Stepanov

Zbigniew Struzik

Zdzisław Burda

Zoltan Neda

Zuzanna Kalinowska

Łukasz Gajewski

FENS 2021

-

-

Conference openingConvener: Ryszard Kutner (Faculty of Physics University of Warsaw)

-

S1Convener: Ryszard Kutner (Faculty of Physics University of Warsaw)

-

1

Network science and application to socio-economic systems

Network science has been successfully applied in recent years in many worldwide systems and processes. These include financial systems [1,2] and social systems [3,4]. I will present and discuss some examples including (i) how network science can be useful to distinguish between fake news and real news [3], (ii) how to identify bottlenecks in urban traffic [5], (iii) how scientists switch between research topics during their career [4] and (iv) what are the advantages of working in fresh teams [6].

References:

[1] A. Majdandzic et al, Nature Physics 10 (1), 34 (2014); Nature Comm. 7, 10850 (2016)

[2] Y. Kobayashi et al, New J. of Physics, 21, 043038 (2019)

[3] Z. Zhao et al, EPJ Data Science 9 (1), 7 (2020)

[4] A. Zeng et al, Nature Communications 10 (1), 1-11 (2019)

[5] Daqing Li at al, PNAS 112, 669 (2015); G. Zheng et al, PNAS 116, 23 (2019)

[6] A. Zeng et al, Nature Human Behaviour, 1-9 (2021)Speaker: Shlomo Havlin (Bar-Ilan University) -

2

Social nucleation: From physics to group formation and opinion polarization

Individuals form groups, which subsequently develop larger domains via competition and coalescence. How much have these social processes in common with established mechanisms of phase transitions in physics? Are nucleation in metastable systems or spinodal decomposition of thermodynamic phases or percolation in porous media suitable paradigms for modeling the emergence of large social groups? We answer this challenging question by providing an agent-based model that combines group formation and opinion dynamics in a novel manner. Opinion formation determines the formation of groups which can form larger clusters of various numbers, density and stability. These clusters can merge, split or rearrange, to develop either compact phases, networks of high modularity, or quasistable cluster distributions. Dependent on the choice of parameters for opinion dynamics and social influence, our model can reproduce social phenomena such as consensus, community formation, weak or strong polarization, sparse social structures or stable minorities.

Speaker: Frank Schweitzer (ETH Zürich) -

3

How accidental scientific success is?

Starting with classical work of Barabási and Albert, the issue with preferential attachment rules is a fundamental problem for modeling evolving networks. Linear preferential attachment rule (also called rich get richer rule or Matthew effect) is the most popular mechanism that leads to power-law vertex degree distribution. However, original preferential attachment rule that appears in Barabási-Albert Network is not enough to describe real citation network.

Thus we want to discuss how to modify preferential attachment rule so it can better match to bibliometric data – by the example of number of citation papers receive. First of all, we will discuss case of initial attractiveness of the nodes, so the preferential attachment rule will only apply to received citation. It perfectly describe the idea of “how good” is given paper, compared to using all citations (both received and given) that would appear in “pure” preferential attachment. Secondly we add random attachment rule as a case when some of citation is given randomly, opposite to the preferential attachment.

Model constructed that way is being analyzed from different perspectives. We calculated node degree distribution using Master equation. Moreover we compare this with results obtained from series of simulations. Last but not least thing is computing parameters that appears in this model with real data - number of citation from DBLP.

Speaker: Przemysław Nowak (Warsaw University of Technology, Faculty of Physics) -

4

How to model a single scientist track record?

Bibliometric research often focuses on the extreme levels of granularity. On the one hand, it focuses on the analysis of individual papers, from the other discussing the features of the entire citation network, losing an intermediate stage of the achievements of one scientist. During the talk, I will try to present the known models of such track records. We will consider how to construct the models and assess their fit with empirical data. In conclusion, we will consider practical decisions which result from the implementation of such models.

Speaker: Grzegorz Siudem (Warsaw University of Technology)

-

1

-

S2Convener: Paweł Sobkowicz

-

5

Financial return distributions across markets and time scales

The dynamics of price changes involves very complex processes and constitutes one of the central issues in Econophysics. The functional forms of return distributions considered and reported in the literature include the Levy distribution and its truncated variant, power-laws and, in particular, its inverse-cubic case, the q-Gaussians and the stretched exponentials. These may vary among the financial instruments and even for the same instrument typically change with the time scale of aggregation. The present contribution is an attempt to provide a unified view on the related effects for different world markets, also from the historical perspective. Special focus is put on those quantitative characteristics of the return distibutions that are common to all the markets.

Speaker: Stanisław Drożdż (Institute of Nuclear Physics Polish Academy of Sciences and Cracow University of Technology, Poland) -

6

Origin of multiscaling in finance and robust and statistically significant estimators

The multiscaling behaviour of financial time-series is one of the acknowledged stylized facts in the literature [1]. The source of the measured multifractality in financial markets has been long debated [2,3]. In this talk I will discuss the origin of multiscaling in financial time-series, investigate how to best quantify it [4,5] and I will introduce a new methodology that provides a robust estimation and tests the multi-scaling property in a statistically significant way [6].

I will show results on the application of the Generalized Hurst exponent tool to different financial time-series, and I will show the powerfulness of such tool to detect changes in markets’ behaviours, to differentiate markets accordingly to their degree of development, to asses risk and to provide a new tool for forecasting [7]. I will also show an empirical relationship, to our knowledge the first on in the literature, which links a univariate property, i.e. the degree of multiscaling behaviour of a time series, to a multivariate one, i.e. the average correlation of the stock log-returns with the other stocks traded in the same market and discuss its implications [8].[1] T. Di Matteo, Q. Finance 7 (2007) 21

[2] J. W Kantelhardt et al, Physica A 316 (2002) 87

[3] J. Barunik, T. Aste, T. Di Matteo, R. Liu, Physica A 391 (2012) 4234

[4] R. J. Buonocore, T. Aste, T. Di Matteo, Chaos, Solitons and Fractals 88 (2016) 38

[5] R. J. Buonocore, T. Di Matteo, T. Aste, Phys. Rev. E 95 (2017) 042311

[6] G. Brandi, T. Di Matteo, Eur. J. Finance (2021) DOI: 10.1080/1351847X.2021.1908391

[7] I. P. Antoniades, G. Brandi, L. G. Magafas, T. Di Matteo, Physica A 565 (2021) 12556

[8] R. J. Buonocore, G. Brandi, R. N. Mantegna, T. Di Matteo, Q. Finance 20 (2020) 133Speaker: Tiziana Di Matteo (Department of Mathematics - King's College London) -

7

Evolving network analysis of S&P500 components. Covid19 influence on cross-correlation network structure.

Interaction is the basic feature of economic systems. Although it is possible to imagine a primitive self-sustained tribe, in the case of a developed economy the interaction (in positive and negative sense i.e. cooperation and competition) are a crucial factor of development. Those interactions are influenced by some events or the state of the systems. The special cases are global events such as crises or recently the Covid19 pandemic. In the present work changes in the correlation structure of economic interactions are investigated, particularly the economy network features. The easiest achievable and reliable characteristic of the company is its value, particularly in the case of the companies quoted on a stokes market. The study analyses evolution of the network of companies quoted on New York stokes components being the components of the S&P500 index. In the analysis, the returns of daily logarithmic returns are considered. In the analysis, the distance matrices are calculated using a sliding time window for the chosen set of time windows sizes (i.e. 5, 10 and 20 days) and for each of the distance matrix the corresponding network is constructed assuming that the companies with the distance within the interquartile range of the sets of the distances on the matrix are connected on the network. The constructions algorithm remove extreme connections remaining the typical ones. Finally, the following network parameters are discussed: node rank entropy, cycle entropy, clustering coefficient and transitivity coefficient. Particular interesting are changes triggered by the recent events – the significant changes observed in entropy time series indicated structural changes in the network of correlation structure induced by the pandemic.

Speaker: Janusz Miśkiewicz (Uniwersytet Wrocławski, Uniwersytet Przyrodniczy we Wrocławiu) -

8

Impact of multilayer topology on source localization in complex networks

It is common nowadays to have to deal with information spreading on multilayer networks and often identification of the origin of said propagation can be a crucial task. We examine the issue of locating the source of Susceptible-Infected spreading process in a multilayer network using the Bayesian inference and the maximum likelihood method established for general networks and adapted here to cover multilayer topology. We show how the quality of source identification depends on the network and spreading parameters and find the existence of two-parameter ranges with different behavior. If cross-layer spreading rate $\beta_C$ is low, observations in different layers interfere, lowering precision below that of relying on single layer observers only. On the other hand, if $\beta_C$ is high observations synergize, raising accuracy above the level of a single-layer network of the same size and observer density. We also show a heuristic method to determine in which mode is a system and therefore potentially improving the quality of source localization by rejecting interfering observations.

Speaker: Robert Paluch (Warsaw University of Technology)

-

5

-

S3Convener: Krzysztof Kułakowski (Faculty of Physics and Applied Computer Science, AGH University of Science and Technology)

-

9

Sociophysics: what was right with my failed prediction of a 2020 Trump victory?

Applying the Galam model of opinion dynamics to predict the outcome of the November 2020 US presidential election, I concluded on Trump victory at the edge. My prediction has failed. On the one hand, a massive Biden victory would have meant my failure was significant implying to revisit the basic elements of the model to find out which relevant ingredient has been missed. On the other hand, since Biden did win at the edge, instead of Trump, the model has proven robust with the error steaming from my rough estimate of respective proportions of inflexibles and prejudice effects. In my talk, I will first demonstrate that, although clear, Biden victory has been tight. Then, I will discuss what went wrong and what has been robust in the making of the prediction of a second Trump victory.

Reference

S. Galam, Will Trump win again in the 2020 election? An answer from a sociophysics model, Physica A 570 (2021) 125835

Speaker: Serge Galam (CEVIPOF, SciencesPo and CNRS) -

10

Modelling time-varying interactions in complex systems: the Score-Driven Kinetic Ising Model

A common issue when analyzing real-world complex systems is that the interactions that characterize them often change over time: this makes it difficult to find optimal models that describe this evolution and can be estimated from data, particularly when the driving rules are not known. Here we offer a new perspective on the development of models for time-varying interactions introducing a generalization of the well-known Kinetic Ising Model (KIM), a minimalistic pairwise constant interactions model which has found applications in multiple scientific disciplines. Keeping arbitrary choices of dynamics to a minimum and seeking information theoretical optimality, the Score-Driven methodology lets us significantly increase the knowledge that can be extracted from data using the simple KIM. In particular, we first identify a parameter whose value at a given time can be directly associated with the local predictability of the dynamics. Then we introduce a method to dynamically learn the value of such parameter from the data, without the need of specifying parametrically its dynamics. Finally, we extend our framework to disentangle different sources (e.g. endogenous vs exogenous) of predictability in real time. We apply our methodology to the high-frequency dynamics of stock prices, neuronal activity, extreme events, and temporal networks. Our results show that the Score-Driven KIM produces insightful descriptions of the observed processes, while keeping computational effort at a minimum, suggesting that our approach can be efficiently translated to other fields where the KIM has been extensively used.

Speaker: Fabrizio Lillo (University of Bologna and Scuola Normale Superiore (Italy)) -

11

Noisy voter model of the parliamentary attendance data

We examine parliamentary attendance data of the 2008–2012, the 2012–2016 and the 2016–2020 legislatures of Lithuanian parliament. In our exploration of the data set we consider cumulative attendance series of the representatives in the parliament as individual traces of the particles. In this scenario we observe that empirical series exhibit superdiffusive behavior. Similar observation was previously made by Vieira and others [1] in the Brazilian parliament attendance data.

We modify the well-known noisy voter model to allow reproduction of the attendance data. Namely, we assume the two states in the model to correspond to intention to attend or to skip the next session. We find that if the intentions are perfect (always result in the intended action), then the noisy voter model reproduces either normal diffusive behavior (if independent behavior dominates) or ballistic regime (if herding behavior dominates). Superdiffusive behavior can be observed only if the intentions are imperfect and only for a relatively narrow range of parameters (corresponding to a balance between independent and herding behavior).

We were able to find parameter set of the model [2], which matches anomalous diffusion observed in Lithuanian data reasonably well. To further verify similarity between the empirical and simulated data, we also compare the distributions of the presence and absence streaks.

- D. S. Vieira et al., PRE 99: 042141 (2019). doi:10.1103/PhysRevE.99.042141.

- A. Kononovicius, J. Stat. Mech. 2020: 063405 (2020). doi: 10.1088/1742-5468/ab8c39.

Speaker: Aleksejus Kononovicius (Institute of Theoretical Physics and Astronomy, Vilnius University) -

12

Vulnerabilities of democratic electoral systems

The vulnerability of democratic processes is under scrutiny after scandals related to Cambrige Analytica (2016 U.S. elections, the Brexit referendum, and elections in Kenya). The deceptive use of social media in the US, the European Union and several Asian countries, increased social and political polarization across world regions. Finally, there are straightforward frauds like Crimea referendum and Belarus elections. These challenges are eroding democracy, the most frequent source of governmental power, and raises multiple questions about its vulnerabilities.

Democratic systems have countless ways of performing elections, which create different electoral systems (ES). It is therefore in citizens' interest to study and understand how different ESs relate to different vulnerabilities and contemporary challenges. These systems can be analyzed using network science in various layers -- they involve a network of voters in the first place, a network of electoral districts connected by commuting flow for instance, or a network of political parties to give a few examples.

It is essential to provide new tools and arguments to the discussion on the evaluation of electoral systems. We aim at comparing different ESs in a dynamical framework. Our novel approach of analyzing electoral systems in such way with all its aspects included, from opinion dynamics in the population of voters to inter-district commuting patterns to seat appointment methods, will help answering questions like:

Which electoral systems are more predictable/stable under fluctuations?

Which electoral systems are the most robust (or vulnerable) under external and internal influences?

Which features of electoral systems make them more (less) stable?Speaker: Tomasz Raducha (Institute for Interdisciplinary Physics and Complex Systems (IFISC), Spain)

-

9

-

-

-

S4Convener: Stanisław Drożdż (Institute of Nuclear Physics Polish Academy of Sciences and Cracow University of Technology, Poland)

-

13

The `endo-exo' problem in complex systems (ecology, earthquakes, financial volatility, epileptic seizures…) and how to disentangle the long-term memory (Hurst, etc.) from time-varying trends?

The "endo-exo" problem in complex systems (ecology, earthquakes, financial volatility, epileptic seizures…) and how to disentangle the long-term memory (Hurst, etc.) from time-varying trends?

The "endo-exo" problem -- i.e., decomposing system activity into exogenous and endogenous parts -- lies at the heart of statistical identification in many fields of science. E.g., consider the problem of determining if an earthquake is a mainshock or aftershock, or if a surge in the popularity of a youtube video is because it is "going viral", or simply due to high activity across the platform. The "endo-exo" problem is also at the heart of a general description of the dynamics of out-of-equilibrium complex systems generalising the fluctuation-susceptibility theorem.

I will present recent exciting results obtained in my group, which include:

[1] S. Wheatley, A. Wehrli, and D. Sornette, The endo-exo problem in high frequency financial price fluctuations and rejecting criticality. Quantitative Finance 19(7), 1165-1178 (2019)

[2] A. Wehrli, S. Wheatley and D. Sornette, Scale-, time- and asset-dependence of Hawkes process parameters estimated on high frequency price change data, Quantitative Finance, doi:10.1080/14697688.2020.1838602 (2021)

[3] S. Nandan, S. K. Ram, G. Ouillon and D. Sornette, Is the Earth crust operating at a critical point? Phys. Rev. Lett. (in press) [arxiv:2012.06013]

[4] K. Kanazawa and Didier Sornette, Non-universal power law distribution of intensities of the self-excited Hawkes process: a field-theoretical approach, Phys. Rev. Lett. 125, 138301 (1-6) (2020)

[5] K. Kanazawa and D. Sornette, New universal mechanism for Zipf's law in nonlinear self-excited Hawkes processes, Phys. Rev. Lett. (submitted) [arxiv:2102.00242]Speaker: Didier Sornette (ETH Zürich) -

14

Social anatomy of a financial bubble

This work is about the social dynamics observed at the onset and during the evolution of a financial bubble. Our characterization detects demographic trends in the flux of new investors buying the Nokia asset, i.e. the most representative dotcom stock of the Nordic Stock Exchange during the dotcom bubble. We track the flux of new investors entering the market daily, and we yearly compare their demographic features with those of the whole Finnish population. As for many innovation product or services, we detect a bursty dynamics of access to the market by new investors. We investigate the attributes of age, postal code and gender, of new Nokia investors.

Speaker: Rosario Mantegna (University of Palermo, Italy) -

15

Multiplicity of social attributes enhances Heider interactions in social triads

Understanding how people interact, form friendships, and formulate opinions is vital for predicting evolution of social systems. Heider Balance Theory (HBT) takes into account dynamics of social triads by considering the well-known rules: a friend of my friend as well as an enemy of my enemy are my friends, and a friend of my enemy as well as an enemy of my friend are my enemies. To capture pair and triadic interactions we connected ideas of homophily and HBT and proposed a model of N agents possessing G attributes each. Edge weights and polarities are a consequence of attributes and in this way homophily and Heider interactions are naturally included. Using Fokker-Planck equation we obtained an analytical solution for a stationary density of link weights and found that a phase transition to the structurally balanced paradise state with positive links only exists in the regime G>O(N^2). This result suggests that the lack of structural balance observed in many social data can be explained by subcritical numbers of social attributes for interacting parties [1]. An extended version of this model was applied to the dataset of interacting students of University of Notre Dame. We found that Heider interactions are evident only when the social distance is measured by multidimensional attributes describing opinions of students on abortion, death penalty, euthanasia, gay marriage, homosexuality, marijuana use, politics and premarital sex. When opinions on each topic are considered separately from the others, the triadic interactions become negligible [2].

References

[1] PJ Górski, K Bochenina, JA Hołyst, RM D’Souza, Phys.Rev.Lett.125 (7), 078302 (2020).

[2] J.Linczuk, P.J.Górski, B.K.Szymański and J.A.Hołyst, submitted.Speaker: Janusz Hołyst (Faculty of Physics, Warsaw University of Technology)

-

13

-

S5Convener: Janusz Hołyst (Faculty of Physics, Warsaw University of Technology)

-

16

Towards the Heider balance – standards and surprises

The talk reports our search for a flexible formulation of the process of removal of cognitive dissonance when identifying enemies and friends. The list of methods includes sets of nonlinear differential equations and cellular automata with different neighborhoods. Results are described on the balanced-imbalanced phase transition on networks of different topology, jammed and dynamic states for symmetric social relations, and perhaps more.

Speaker: Krzysztof Kułakowski (AGH University of Science and Technology) -

17

Mutation of information in messages spreading processes in social networks

Lots of people get information from social media, where users can exchange messages. In most cases, content transmits without any changes. Sometimes, users modify form or content of the message before passing it on. Understanding the mechanism of message mutation could allow us to become more resistant to misinformation and better identify fake news.

We propose a simple model in which agents (users) can communicate with their neighbors by posting messages on their own page. A single message contains a negative, neutral or positive opinion on several topics. Users can transmit it as it is, create a new one, transmit with modification or ignore it. Messages are simplified to be a vector consisting of –1, 0, or 1, each component representing opinion on a specific topic. Cosine similarity between a vector of agent’s opinion and message content measures how much he agrees with it. Users do not transmit messages when the similarity is lower than a certain tolerance threshold – a model’s parameter.

We performed simulations to see how the mutation of information influences the spreading of messages in the network. It was foreseeable that when modifying messages was allowed, they could penetrate deeper into the network. However, we have observed that this happens only for a specific range of tolerance threshold, and if the threshold is too low or too high, the mutation does not play a significant role in the dynamics.

These results appear where the agent’s opinions are purely random. However, this is rarely the case in social networks, where people often seek to communicate with similar-minded individuals. To represent this, we have also performed simulations for the case where there is a varying degree of similarity between opinion vectors of connected agents.

Speaker: Patryk Bojarski (Warsaw University of Technology) -

18

Agent based model of anti-vaccination movements: simulations and comparison with empirical data

A realistic description of the social processes leading to the increasing reluctance to various vaccination forms is a very challenging task. This is due to the complexity of the psychological and social mechanisms determining the individual and group positioning versus vaccination and the associated activities. Understanding the role played by social media and the Internet in the current spread of the anti-vaccination (AV) movement is of crucial importance. We present long-term Big Data analyses of the Internet activity connected with the AV movement for such different societies as the US and Poland.

The datasets we analyzed cover multiyear periods preceding the COVID-19 pandemic,

documenting the vaccine-related Internet activity with high temporal resolution.

These activities show the presence of short-lived interest peaks, much higher than the low activity background. To understand the empirical observations we propose an Agent Based Model (ABM) of the AV movement including complex interactions between various types of agents and processes. The model reproduces the observed temporal behavior of the AV interest very closely.

The model allows studying strategies combating the AV propaganda. The increase of intensity of standard pro-vaccination communications by the government agencies and medical personnel is found to have little effect. On the other hand, focused campaigns using the Internet and copying the highly emotional and narrative format used by the anti-vaccination activists or censoring and taking down anti-vaccination communications by social media platforms can diminish the AV influence. The benefit of such tactics might, however, be offset by their social cost, for example, the increased polarization and increased persecution and martyrdom tropes.Speaker: Paweł Sobkowicz (Narodowe Centrum Badań Jądrowych) -

19

Controlling epidemic spread by social distancing

The COVID-19 outbreak has so far caused millions of cases and deaths globally. Human behaviour has been identified as the key factor in the spread of the SARS-CoV-2 virus and there is considerable interest in understanding the relationship between the way people act in response to the infection risk and the disease progress. Mathematical modelling has been at the centre of policy-making aimed at the prediction and control of the virus, using a wide range of approaches. We introduce a network model incorporating disease spread and individual decision making in response to disease risk. Disease spread is controlled by allowing susceptible individuals to temporarily reduce their social contacts in response to the presence of infection within their local neighbourhood. A cost is ascribed to the loss of social contacts, and weigh this against the benefit gained by reducing the impact of the epidemic. Depending on the characteristics of the epidemic and on the relative social importance of contacts versus avoiding infection, the optimal control is one of two extremes: either to adopt a highly cautious control, thereby suppressing the epidemic quickly by drastically reducing contacts as soon as the disease is detected; or else to forego control and allow the epidemic to run its course. The worst outcome arises when control is attempted but not cautiously enough to cause the epidemic to be suppressed. The model has been tested in experiments predating the COVID-19 outbreak when the observed response was too weak to halt epidemics quickly, resulting in a reduced attack rate but the longer duration and fewer social contacts, compared to no response. A comparison with the current pandemic shows the prevalence of repeated cycles of outbreaks caused by relaxation of response.

Speaker: Adam Kleczkowski (University of Strathclyde)

-

16

-

S6Convener: Bartlomiej Dybiec (Jagiellonian University)

-

20

From data to complex network control with applications to covid-19 pandemic and airline flight delays

Increasingly, vast data sets collected about complex network activities via monitoring and sensing devices define dynamics of these networks. This creates a challenge for controlling them, since it requires formal definition of network dynamics. In this talk, we introduce a framework for deriving the formal definition of complex network’s dynamics from data. It provides an important description of the network dynamic that focuses on its failures. Its control has multiple sources of cost, which are beyond the current scope of traditional control theory. We developed a method for converting network interaction data into continuous dynamics, to which we apply optimal control. In the talk, we describe this approach in two meaningful examples. In the first example, we focus on control of pandemics. We identify seven risks commonly used by governments to control COVID-19 spread. We illustrate how our method can control dynamics on this pandemic risk network optimally and analyze how the chosen control nodes affect total cost. We show that many alternative sets of controlling risks, different from commonly used ones, exist with potentially lower cost of control. The second example focuses on the air transportation network of the United States to abate its annoying frequent failures of flight delays. We build flight delay networks for each U.S. airline. Analyzing these networks, we uncover and formalize their dynamics. We use this formalization to design the optimal control for the flight delay networks. Our results demonstrate that the framework can effectively reduce the delay propagation and it significantly reduces the costs of delays to passengers, airlines and airports.

Speaker: Boleslaw Szymanski (Rensselaer Polytechnic Institute, Troy, NY, USA) -

21

Do economic effects of the anti-COVID-19 lockdowns in different regions interact through supply chains?

To prevent the spread of COVID-19, many cities, states, and countries have `locked down', restricting economic activities in non-essential sectors. Such lockdowns have substantially shrunk production in most countries. This study examines how the economic effects of lockdowns in different regions interact through supply chains, which are a network of firms for production, by simulating an agent-based model of production using supply-chain data for 1.6 million firms in Japan. We further investigate how the complex network structure affects the interactions between lockdown regions, emphasising the role of upstreamness and loops by decomposing supply-chain flows into potential and circular flow components. We find that a region's upstreamness, intensity of loops, and supplier substitutability in supply chains with other regions largely determine the economic effect of the lockdown in the region. In particular, when a region lifts its lockdown, its economic recovery substantially varies depending on whether it lifts the lockdown alone or together with another region closely linked through supply chains. These results indicate that the economic effect produced by exogenous shocks in a region can affect other regions and therefore this study proposes the need for inter-region policy coordination to reduce economic loss due to lockdowns.

Speaker: Hiroyasu Inoue (University of Hyogo)

-

20

-

Poster session

-

22

Bi-layer temporal model of echo chambers and polarisation

Echo chambers and polarisation dynamics are as of late a very prominent topic in scientific communities around the world. As these phenomena directly affect our lives, and seemingly more and more as our societies and communication channels evolve, it becomes ever so important to understand the intricacies of novel opinion dynamics in the modern era. We build upon an existing echo chambers and polarisation model and extend it onto a bi-layer topology allowing us to indicate the possible consequences of two interacting groups. We develop both agent-based simulations and mean field solutions showing that there are conditions in which the system can reach states of a neutral or polarised consensus, a polarised opposition, and even opinion oscillations.

Speaker: Łukasz Gajewski (Warsaw University of Technology) -

23

Three-state opinion q-voter model with bounded confidence

We study the q-voter model with bounded confidence on the complete graph. Agents can be in one of three states. Two types of agents behaviour are investigated: conformity and independence. We analyze whether this system is qualitatively different from a corresponding model without bounded confidence. Our main results are the following. Firstly, the system has two phase transitions: one between order-order phases and another between order-disorder phases. Secondly, the first transition is discontinuous in all analyzed cases, while the type of second transition depends on the size of group of influence.

Speakers: Maciej Doniec (wrocław university of science and technology), Wojciech Radosz (Wroclaw University of Science and Technology) -

24

Diffusion of innovation on networks: agent-based vs. analytical approach

We study an agent-based model of innovation diffusion on the Watts-Strogatz random graphs. The model is based on the $q$-voter model with a noise (with nonconformity, in the terminology of social psychology), which has been previously used to describe the diffusion of green products and practices. It originates from the $q$-voter model with independence, known also as the noisy nonlinear voter or the noisy $q$-voter model. In the original model states $\uparrow$ (yes/agree) and $\downarrow$ (no/disagree) are symmetrical and in case of independent behaviour each of them is taken with the same probability. However, when the model is used to describe diffusion of innovation the up-down symmetry is broken. We investigate the model analytically via mean-field approximation, which gives the exact result in case of a complete graph, as well as via more advanced method called pair approximation to determine how the average degree of the network influences the process of diffusion of innovation. Additionally, we conduct Monte Carlo simulations to check in which cases the agent-based model can be reduced to the analytical one and when it cannot be done. We obtain the $S$-shaped curve of the number of adopters in time that agrees with empirical observations. We also highlight that the time needed for adoption depends on model parameters. Furthermore, we present the trajectories and the stationary concentration of adopted for different sets of parameters to systematically analyze the model and determine when the adoption would fail.

Acknowledgement

This research is supported by project “Diamentowy Grant” DI2019 0150 49 financed by Polish Ministry of Science and Higher Education.Speakers: Mikołaj Szurlej (Wrocław University of Science and Technology), Angelika Abramiuk-Szurlej (Wrocław University of Science and Technology) -

25

Bias reduction in covariance matrices and its effects on high-dimensional portfolios.

The current world keeps evolving, and in our era the challenge of "too much data" keeps popping up, in particular in the world of portfolio optimization, therein lies the opportunity of providing more accurate results through the use of the relatively new tools developed in the random matrix theory literature to reduce the bias in the sample covariance matrix of some financial data (real or simulated). In this poster we will present a couple of these tools and a brief summary of the results that can be achieved with them.

In this work we will apply the clipping, Tracy-Widom, linear shrinkage and non linear shrinkage techniques as our bias reduction mechanisms, and then to compare their efficacy we will compare them by using the resulting estimators of the underlying covariance matrix to optimize our financial portfolios.

The financial portfolio model we use is the classic Markowitz model with fixed returns of one for all our assets and we use two variants, the first one with the only restriction that we must assign all of our capital into our selected assets, and the second one with the same restriction plus a second one in which we do not consider short selling or more specifically that we can only buy assets.

The data that we use also consists of synthetic and real data, the synthetic data consists of two types the first one is structured gaussian and the second one is from a simulated GARCH time series , while the real data consists of two portfolios one considering only traditional stocks and the other with a mix of stocks and cryptocurrencies.

We hope to determine the efficacy of the bias reduction techniques to optimize financial data under multiple scenarios.

Speaker: Benito Rodriguez Camejo (CIMAT Monterrey) -

26

The spread of ideas in a network – the garbage can model

The main goal of our work is to show how ideas change in social networks. Our analysis is based on three concepts: (i) temporal networks [1], (ii) the Axelrod model of culture dissemination [2], (iii) the garbage can model of organizational choice [3]. The use of the concept of temporal networks allows us to show the dynamics of ideas spreading processes in networks, thanks to the analysis of contacts between agents in networks. The Axelrod culture dissemination model allows us to use the importance of cooperative behavior for the dynamics of ideas disseminated in networks. In the third model decisions on solutions of problems are made as an outcome of sequences of pseudorandom numbers. The origin of this model is the Herbert Simon’s view on bounded rationality [4].

In the Axelrod model, ideas are conveyed by chains of symbols. The outcome of the model should be the diversity of evolving ideas as dependent on the chain length, on the number of possible values of symbols and on the threshold value of Hamming distance which enables the combination.[1] P. Holme and J. Saramaki, Temporal networks, Phys. Rep. 519, 97 (2012).

[2] R. Axelrod, The dissemination of culture: a model of local convergence and global polarization, J. of Conflict Resolution 41, 203 (1997).

[3] M. D. Cohen, J. G. March and J.P. Olsen, A garbage can model of organizational choice, Administrative Science Quarterly 17, 1 (1972).

[4] H. A. Simon, A behavioral theory of rational choice, The Quarterly J. of Economics 69, 99 (1955).Speaker: Dorota Żuchowska-Skiba (AGH Kraków) -

27

Multibranch multifractality and the phase transitions in the trading activity

Empirical time series of inter-event or waiting times are investigated using a modified Multifractal Detrended Fluctuation Analysis operating on fluctuations of mean detrended dynamics. The core of the extended multifractal analysis is the non-monotonic behavior of the generalized Hurst exponent $h(q)$ -- the fundamental exponent in the study of multifractals. The consequence of this behavior is the non-monotonic behavior of the coarse Hölder exponent $\alpha (q)$ leading to multi-branchedness of the spectrum of dimensions. The Legendre-Fenchel transform is used instead of the routinely used canonical Legendre (single-branched) contact transform. Thermodynamic consequences of the multi-branched multifractality are revealed. The results [1] are presented for the high-frequency data from Polish stock market (Warsaw Stock Exchange) for intertrade times for KGHM - one of the most liquid stocks there.

[1] J. Klamut, R. Kutner, T. Gubiec, and Z. R. Struzik, 'Multibranch multifractality and the phase transitions in time series of mean interevent times', Phys. Rev. E 101, 063303 (2020)

Speaker: Jarosław Klamut (University of Warsaw) -

28

Discontinous phase transitions in the generalized q-voter model on random graphs

We investigate the binary $q$-voter model with generalized anticonformity on random Erdős–Rényi graphs. The generalization refers to the freedom of choosing the size of the influence group independently for the case of conformity $q_c$ and anticonformity $q_a$. This model was studied before on the complete graph, which corresponds to the mean-field approach, and on such a graph discontinuous phase transitions were observed for $q_c>q_a + \Delta q$, where $\Delta q=4$ for $q_a \le 3$ and $\Delta q=3$ for $q_a>3$. Examining the model on random graphs allows us to answer the question whether a discontinuous phase transition can survive the shift to a network with the value of average node degree that is observed in real social systems. By approaching the model both within Monte Carlo (MC) simulations and Pair Approximation (PA), we are able to compare the results obtained within both methods and to investigate the validity of PA. We show that as long as the average node degree of a graph is relatively large, PA overlaps MC results. On the other hand, for smaller values of the average node degree, PA gives qualitatively different results than Monte Carlo simulations for some values of $q_c$ and $q_a$. In such cases, the phase transition observed in the simulation is continuous on random graphs as well as on the complete graph, whereas PA indicates a discontinuous one. We determine the range of model parameters for which PA gives incorrect results and we present our attempt at validating the assumptions made within the PA method in order to understand why PA fails, even on the random graph.

Speakers: Jakub Pawłowski (Wrocław University of Science and Technology), Mr Arkadiusz Lipiecki (Wrocław University of Science and Technology) -

29

From relation to interactions: a case study in Reddit website

Elucidating what factors are salient in emerging interactions in social networks is still an open question. Thus, we develop an agent-based model for generating interactions in signed networks. The ABMs, based on the Activity Driven Network model, use signed relations between agents to reproduce their interaction frequencies and crucial network distributions. The calibration and validation step is performed on the Reddit Hyperlink network, where agents are represented by subreddits (communities), and links by hyperlinks between communities. We devise a profound methodology to assess the performance of the models. The proposed ABM successfully reproduces basic node-link and higher-order statistics of the empirical dataset.

Speaker: Robert Jankowski (Faculty of Physics, Warsaw University of Technology) -

30

Information Measure for Long Range Correlated Time Series

We applied the moving average cluster entropy to study long-range correlation, dynamics and heterogeneity of financial time series, and to propose an alternative method to portfolio estimation.

The cluster entropy relies on the Shannon entropy $S(P_i) = - \sum_i P_i \ln P_i$. The probability distribution function of each asset $i$ is obtained intersecting the asset time series $y(t)$ with its moving average series $y_n(t)$. The portion of time series between two consecutive intersections of $y(t)$ and $y_n(t)$ is defined as a cluster. Then, $P(\tau,n)$, which associates clusters of duration $\tau$ to their frequency, is fed into the Shannon entropy to obtain the moving average cluster entropy. We summarize the results into the cluster entropy index $I(n)$, obtained integrating $S(\tau,n)$ over duration $\tau$.

We used high frequency financial time series of important market indexes (NASDAQ, DJIA, S\&P500, ...) of 2018. To study their dynamics, we analyzed twelve consecutive temporal horizon, summing up to a whole year.

We found positive long-range correlation for financial data, similar to fractional stochastic process with Hurst exponent $0.5\leq H\leq 1$.

Moreover, we compared our results to Kullback-Leibler entropy results based on the pricing kernel simulations for different representative agent models.We show that the cluster entropy of volatility series depends on the individual asset, while the cluster entropy of price series is invariant for different assets. Furthermore, we propose the cluster entropy portfolio. Portfolio weights are obtained from the cluster entropy index $I$. We also integrated horizon dependence. We show the cluster entropy portfolio ability to adjust to market dynamics by varying asset allocation.

Speaker: Pietro Murialdo (Politecnico di Torino) -

31

How social interactions lead to polarized relations?

In the age of social media there is more and more need to understand how do people create relations using those. The objective here was not only to look if relations are being created as a consequence of social interactions, but also to separate emergence of positive and negative relations. The knowledge we can gain here could benefit a wide spectrum of applications - from purely scietific to business.

In this work we used a dataset from Epinions website. In this dataset users of the website can create articles, rate them and declare trust or distrust towards other users. The consequence of giving somebody a distrust rating is to reduce the probability to see their articles in the future. In the model users are treated as agents in a network. They can be connected via signed edges which represent existence and polarization of their relations.

The focus of this work was put on the period where the declaration of trust was not yet established, as the impact of recommendation algorithm had to be avoided. We found that for any relation to be created, two agents/users need to interact more with each other. It might be counterintuitive if we think of negative relations as a consequence of avoiding the other person.

This research is one of the first steps in our more thorough work directed at understanding the topic of polarized relations and interactions.

This research received funding from National Science Centre, Poland Grant No. 2019/01/Y/ST2/00058.Speaker: Maciej Pawlik (Faculty of Physics, Warsaw University of Technology ) -

32

Archetypal analysis and classical segmentation methods. Comparison of two approaches on financial data.

Macroeconomic analyses are, to a large extent, based on firm segmentation and creating homogeneous groups of entities. Thanks to this procedure one can estimate indicators (e.g., various Key Performance Indicators) with high accuracy and examine trends of the market. Unfortunately, the drawback is that segmentation algorithms based on distance measures model average values and are not suitable to analysing unusual events. Modelling in Archetypal Analysis is done in a different way. Both approaches are used e.g., in marketing research, where on one hand one looks for target groups and on the other hand introduces active techniques in form of trend makers.

Archetypal Analysis was introduced in 1994 by Cutler and Breiman as a method that provides some kind of reference observations for given data. Archetypes are extreme observations, vertexes of convex hull of the data points obtained as a result of a two-stage nonlinear optimization.

The aim of our research is to compare results of analyses done using both, segmentation methods and averaging forecasts and Archetypal Analysis for firms listed on WSE and described by financial indicators (KPI). The authors propose an approach that brings together advantages of both methods. In order to compare Archetypal Analysis and the approach based on segmentation methods, the authors used financial data.Speaker: Urszula Grzybowska (Warsaw University of Life Sciences (SGGW)) -

33

Wealth transfer in an economy with two social groups

Social stratification, the division of society into groups, can result from historical and social factors and reflect economic inequality. Ethnicity, gender, and race are examples of subgroups where people belonging to one can be privileged in terms of status, power, and wealth. In fact, in many societies, dominant classes are composed of many people associated with one group, and economic inequality might be related to this non-economical classification.

We study an agent model where pair of individuals can exchange wealth with no underlying lattice. The wealth, risk aversion factor, and group of the agents characterize their state. The wealth exchanged between two agents is calculated by a fair rule, where both agents put the same amount at stake, i.e., no agent can win more than he/she is willing to lose. We consider two subsystems; interaction between agents can be among members of the same group or with the other group with different probabilities. Each subsystem has different protections to the poor agent, whereas the intergroup exchanges obey an exclusive protection rule, which can be understood as a public policy to reduce inequality.

Our results show that the most protected group shows more accumulated wealth, less inequality, and its agents have more chances of economic mobility. Another significant result is that the agents on the less protected group transfer their wealth to agents of the other group on average. The amount transferred depends on the relation of group internal social protection and the probability of agents of different groups interact.Speaker: Thiago Dias (Universidade Tecnológica Federal do Paraná) -

34

Hierarchy depth in directed networks

The term "hierarchy" when applied to networks can mean one of the few structures: simple order hierarchy meaning ordering of elements, nested hierarchy that is multi-level community structure or flow hierarchy defined by directed links that show causal or control structure in the network.

We introduce two measures of node depth for flow hierarchy in directed networks, measuring the level of hierarchy the nodes belong to. Rooted depth is defined as distance from specific root node and relative depth relies on directed links serving as "target node has larger depth than source" relations.

We explore the behavior of these two measures, their properties and differences between them. To cope with eventual directed loops in the networks we introduce loop-collapse method, that evens out depth values for all nodes in the same directed loop.

We investigate the behavior of the introduced depth measures in random graphs of different sizes and densities as well as some real network topologies. Maximum depth depends on network density, first increasing with mean degree, up to percolation threshold and declining afterwards as the number of loops increase.Speaker: Krzysztof Suchecki (Warsaw University of Technology) -

35

Scalable learning of independent cascade dynamics from partial observations

Spreading processes play an increasingly important role in modeling for diffusion networks, information propagation, marketing and opinion setting. We address the problem of learning of a spreading model such that the predictions generated from this model are accurate and could be subsequently used for the optimization, and control of diffusion dynamics. Unfortunately, full observations of the dynamics are rarely available. As a result, standard approaches such as maximum likelihood quickly become intractable for large network instances. We introduce a computationally efficient algorithm, based on a scalable dynamic message-passing approach, which is able to learn parameters of the effective spreading model given only limited information on the activation times of nodes in the network. We show that tractable inference from the learned model generates a better prediction of marginal probabilities compared to the original model. We develop a systematic procedure for learning a mixture of models which further improves prediction quality of the model.

More details at:

https://arxiv.org/pdf/2007.06557.pdfSpeaker: Mateusz Wilinski (Los Alamos National Laboratory) -

36

Discontinuous phase transitions in the multi-state noisy q-voter model: quenched vs. annealed disorder

We introduce a generalized version of the noisy q-voter model, one of the most popular opinion dynamics models, in which voters can be in one of s≥2 states. As in the original binary q-voter model, which corresponds to s=2, at each update randomly selected voter can conform to its q randomly chosen neighbors (copy their state) only if all q neighbors are in the same state. Additionally, a voter can act independently, taking a randomly chosen state, which introduces disorder to the system. We consider two types of disorder: (1) annealed, which means that each voter can act independently with probability p and with complementary probability 1−p conform to others, and (2) quenched, which means that there is a fraction p of all voters, which are permanently independent and the rest of them are conformists. We analyze the model on the complete graph analytically and via Monte Carlo simulations. We show that for the number of states s>2 model displays discontinuous phase transitions for any q>1, on contrary to the model with binary opinions, in which discontinuous phase transitions are observed only for q>5. Moreover, unlike the case of s=2, for s>2 discontinuous phase transitions survive under the quenched disorder, although they are less sharp than under the annealed one.

Speaker: Bartłomiej Nowak (Wrocław University of Science and Technology) -

37

Escaping polarization

One of the challenges faced by today's societies is to deal with the growing polarization. Here, we propose an agent-based model incorporating theories of structural balance and homophily. Most of the literature identify a structurally balanced state as a polarized one. However, we show that these two states are not always equivalent. We study a multilayer system with one layer related to the agents' relations and the others to the similarity between agents. We define the polarization as the state with two or more enemy groups in the relation layer and we study the influence of different types of homophily in the similarity layers and homophily strength on the fates of the system. We identify homophily types that are most efficient in limiting the system polarization.

This research received funding from National Science Centre, Poland Grant No. 2019/01/Y/ST2/00058.Speaker: Piotr Górski (Warsaw University of Technology, Faculty of Physics) -

38

The Economic Impact of Modern Maritime Piracy

I present the issue of quantifying the impact of modern maritime piracy practises on world trade. For this purpose the case of Somali piracy is investigated. Data is carefully analysed with the statistical methodology of modern empirical economics to identify the causal effect of pirate attacks on trade volumes and transportation costs. This is a good example for the detection of small causal effects that are drowned out by much larger fluctuations in the data, if these are not carefully removed. Furthermore, a gravity model for international trade is incorporated.

I find that piracy substantially reduces the amount of goods shipped through the affected area (average annual trade reduction of 4.3 billion USD from 2000 to 2019), while no significant effect on transportation cost can be found.

This research was conducted as part of my Master's thesis and is of relevance due to the long ongoing issue of appropriate anti-piracy measures. [T. Besley, T. Fetzer, and H. Mueller, "The welfare cost of lawlessness: Evidence from Somali Piracy", Journal of the European Economic Association 2015; A. Burlando, A. D. Cristea, and L. M. Lee, "The Trade Consequences of Maritime Insecurity: Evidence from Somali Piracy", Review of International Economics 2015]Speaker: Jana Meles (Politechnika Warszawa) -

39

Finding optimal strategies in the Yard-Sale model using neuroevolution techniques

A new type of in-depth microscopic analysis is presented for the Yard-Sale model, one of the most well known multi-agent market exchange models. This approach led to the classification and study of the individual strategies carried out by the agents undergoing transactions, as given by their risk propensity. These findings allowed to determine a region of parameters for which the strategies are successful, and in particular, the existence of an optimal strategy. To continue exploring this concept, a new approach is then proposed in which rationality is added in the agents behaviour through machine learning techniques. Strategies that maximize the individual wealth of each agent were then found by performing their training through a genetic algorithm. The addition of different levels of rationality given by the amount of available information from their environment showed new and promising results, both at the macroscopic and microscopic level. It was found that the addition of trained agents in these systems leads to an increase in wealth inequality at the collective level.

Speaker: Julian Neñer (Balseiro Institute) -

40

Generic Features in the Spectral Decomposition of Correlation Matrices

We show that correlation matrices with particular average and variance of the correlation coefficients have a notably restricted spectral structure. Applying geometric methods, we derive lower bounds for the largest eigenvalue and the alignment of the corresponding eigenvector. We explain how and to which extent, a distinctly large eigenvalue and an approximately diagonal eigenvector generically occur for specific correlation matrices independently of the correlation matrix dimension.

Speaker: Yuriy Stepanov -

41

In searching for possible relationships between the COVID-19 pandemic and the currency exchange rates via the Dynamic Time Warping method

The Covid-19 pandemic has affected not only economies of particular countries but the entire world economic system. It is not surprising that also currency exchange rates are not left untouched by the current crisis. The main objective of our study here is to assess the similarity between the time series of currency exchange rates and the Covid-19 time series (e.g., we investigate the relationship between the Euro/USD exchange rate and the ratio of Covid-19 daily cases in Eurozone and the USA). In other words, we want to quantitatively know how various currency exchange rates are associated with the observed development of the pandemic. To achieve this goal and to check if and to what extent the Covid-19 spread is related to the exchange rates, we employ the Dynamic Time Warping (DTW) method. Making use of the DTW method, a distance between analyzed time series can be defined and calculated. Having such a distance makes it possible to group currencies according to their change relative to the pandemic dynamics. Time shifts between daily Covid-19 events and currency exchange rates are also analyzed within the framework of the developed formalism.

Speaker: Arkadiusz Orłowski (Katedra Sztucznej Inteligencji, Instytut Informatyki Technicznej, SGGW w Warszawie, Poland) -

42

Weighted Axelrod model

The Axelrod model is a well known model of culture development and dissemination describing a possible mechanism for emergence of cultural domains. It is based on two sociological phenomena: homophily and the theory of social influence. Technically, it assumes that every culture is represented by a vector of F cultural traits (features), each taking any of q allowed opinions (values). The model assumes that an individual can interact with local neighbors if they share common traits. The agents are conservative in the sense that they are more likely to interact with other agents who are similar to them. At every successful interaction, one of the interacting agents accepts the agent’s point of view on a topic on which both agents differ. Consequently, interactions increase the similarity between agents and make them even more likely to interact in the future. The Axelrod model allows for coexistence of multiple cultural domains where neighboring cultures are completely different.

The Axelrod model does not take into account the fact that cultural attributes may have different significance for a given individual. This is a limitation in the context of how the model reflects the mechanisms driving the evolution of real societies. The model is modified by giving individual features different weights that have a decisive impact on the possibility of changing the opinion and in turn on interactions between two individuals. Introduced weights have a significant impact on the system evolution, in particular they increase the polarization of the system in the final state.

[1] R. Axelrod, J. Conflict Resolt. 41, 203 (1997).

[2] B. Dybiec, N. Mitarai and K. Sneppen, Eur. Phys. J. B 85, 357 (2012).Speaker: Zuzanna Kalinowska (Faculty of Physics, Astronomy and Applied Computer Science, Jagiellonian University)

-

22

-

-

-

S7Convener: Zdzisław Burda (AGH University of Science and Technology)

-

43

The Dynamics of the Interbank Market: Statistical Stylized Facts and Agent-Based Models

We review a number of basic stylized facts of the interbank market that have

emerged from the empirical literature over the last years. Our objective is to explain these

findings as emergent properties of dynamic agent-based model of the interaction within the

banking sector. To this end, we develop a simple dynamic model of interbank credit

relationships. Starting from a given balance sheet structure of a banking system with a

realistic distribution of firm size, the necessity of establishing interbank credit connections

emerges from idiosyncratic liquidity shocks. Banks initially choose potential trading partners

randomly, but form preferential relationships via an elementary reinforcement learning

algorithm. As it turns out, the dynamic evolution of this system displays a formation of a

core-periphery structure with mostly the largest banks assuming the roles of money center

banks mediating between the liquidity needs of many smaller banks. Preferential interest rates

for borrowers with strong attachment to a lender prevent the system from becoming

extortionary and guarantee the survival of the small periphery banks.Speaker: Thomas Lux (University of Kiel) -

44

“Private Truths, Public Lies” within agent-based modeling.

The title of this talks is inspired by the Timur Kuran’s book entitled “Private Truths, Public Lies. The Social Consequences of Preference Falsification”. During my presentation I will talk about the idea and real-life examples of Preference Falsification (PF). Furthermore, I will propose a simple binary agent-based model, which allows to describe PF by introducing two levels of the opinion: the public and the private one. Finally, I will discuss how PF can help in explaining social and political dynamics.

Speaker: Katarzyna Sznajd-Weron (Department of Theoretical Physics, Faculty of Fundamental Problems of Technology, Wroclaw University of Science and Technology) -

45

Education as a Complex System: Using physic methods for the analysis of students' learning behaviours in the context of programming education.

As a result of the COVID19 pandemic, more higher-level education courses have moved to online channels, raising challenges in monitoring students’ learning progress. Thanks to the development of learning technologies, learning behaviours can be recorded at a more fine-grain level of detail, which can then be further analysed. Inspired by approaching education as a complex system, this research aims to develop a novel approach to analyse students’ learning behavioural data, utilising physical methods. First, essential learning behavioural features are extracted. Second, a range of techniques, e.g., Random Matrix Theory and Community Detection techniques, were utilised to clean the noise in the data and cluster the students into groups with similar learning behavioural characteristics. The proposed methods have been applied to datasets collected from an online learning platform in an Irish University. The datasets contain information of more than 500 students in different programming-related modules over three academic years (2018 to 2021). Results indicate the similarity and deviation of learning behaviours between student cohorts. Overall, students interacted similarly with all course resources during the semester. However, while higher-performing students seem to be more active in practical tasks, lower-performing students have been shown to have more activities with lecture notes and have lost their focus at the later phase of the semester. Additionally, the student learning behaviours in a conventional university setting tend to be significantly different to the students in a fully online setting during the pandemic. Recommendations from the work for current educational practice are made.

Speaker: Tai Mai (Dublin City University) -

46

Biased voter model: How persuasive a small group can be?

We study the voter model dynamics in the presence of confidence and bias. We assume two types of voters. Unbiased voters (UV) whose confidence is indifferent to the state of the voter and biased voters (BV) whose confidence is biased towards a common fixed preferred state. We study the problem analytically on the complete graph using mean field theory and on a random network topology using the pair approximation, where we assume that the network topology is independent of the type of voters. We verify our analytical results through numerical simulations. We find that for the case of a random initial setup, and for sufficiently large number of voters N, the time to consensus increases proportionally to log(N)/γv, with γ the fraction of biased voters and v the bias of the voters. Finally, we study this model on a biased-dependent topology. We examine two distinct, global average-degree preserving strategies to obtain such biased-dependent random topologies starting from the biased-independent random topology case as the initial setup. We find that increasing the average number of links among only biased voters (BV-BV) at the expense of that of only unbiased voters (UV-UV), while keeping the average number of links among the two types (BV-UV) constant, resulted in a significant decrease in the average time to consensus to the preferred state in the group. Hence, persuasiveness of the biased group depends on how well its members are connected among each other, compared to how well the members of the unbiased group are connected among each other.

Speaker: Christos Charalambous

-

43

-

S8Convener: Katarzyna Sznajd-Weron (Department of Theoretical Physics, Faculty of Fundamental Problems of Technology, Wroclaw University of Science and Technology)

-

47

Portfolio selection, shrinkage estimators and correlated samples

The problem of estimating covariance matrix plays a fundamental role in portfolio selection. Recently a new estimator of large-dimensional covariance matrices has been proposed to reduce out-of-sample risk of large portfolios. The estimator is called non-linear shrinkage estimators. We derive an analytic formula for the non-linear shrinkage estimator of large dimensional covariance for correlated samples.

Speaker: Zdzisław Burda (AGH University of Science and Technology) -

48

Critical phenomena in the market of competing firms induced by state interventionism

There are two primary goals of this talk. First, we propose a flexible algorithm that can simulate various scenarios of state/government intervention. Secondly, we analyze in detail the scenario with the widest possible spectrum of stationary states. It exhibits the critical behavior of the market of competing firms, depending on the degree of government intervention and the activity level of the firms. Thus, we have analyzed the second-order phase transition series, finding the levels of critical intervention and the critical exponent values. As a result of this phase transition, we have observed an "unlimited" increase of fluctuations at each critical intervention level and the local breakdown of the average market technology concerning the frontier technology therein.

Speaker: Ryszard Kutner (Faculty of Physics University of Warsaw) -